Today, we are releasing LFM2.5-1.2B-Thinking, a reasoning model that runs entirely on-device. It fits within 900 MB of memory on a phone and delivers both the fastest inference speed and the best quality for its size. What required a data center two years ago now runs offline in your pocket.

In addition, we are expanding our ecosystem by welcoming Qualcomm Technologies, Inc., Ollama, FastFlowLM, and Cactus Compute as new launch partners, joining our existing partners AMD and Nexa AI. These partnerships unlock powerful deployment scenarios across vehicles, smartphones, laptops, IoT, and embedded systems.

LFM2.5-1.2B-Thinking is available today on Hugging Face, LEAP, and our Playground.

Benchmarks

LFM2.5-1.2B-Thinking is the latest addition to the LFM2.5 family. It’s a 1.2 billion parameter model trained specifically for reasoning tasks. It generates thinking traces before producing answers, working through problems systematically. This model leverages the unique inference speed of LFMs to produce higher-quality answers.

Compared to LFM2.5-1.2B-Instruct, three capabilities jump dramatically: math reasoning (63→88 on MATH-500), instruction following (61→69 on Multi-IF), and tool use (49→57 on BFCLv3).

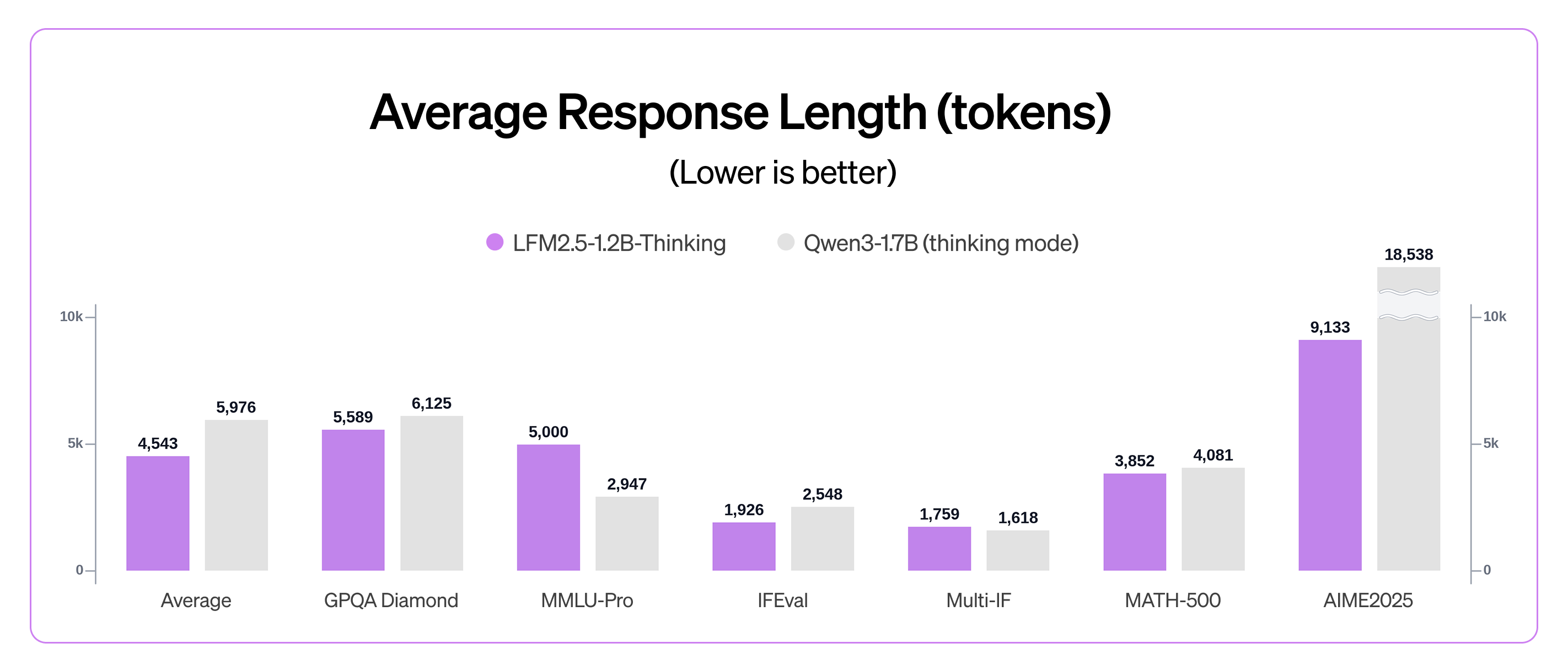

LFM2.5-1.2B-Thinking matches or exceeds Qwen3-1.7B on most reasoning benchmarks, despite having 40% fewer parameters. Moreover, it combines strong quality with efficient test-time compute. In comparison with Qwen3-1.7B (thinking mode), it requires fewer output tokens while offering higher overall performance.

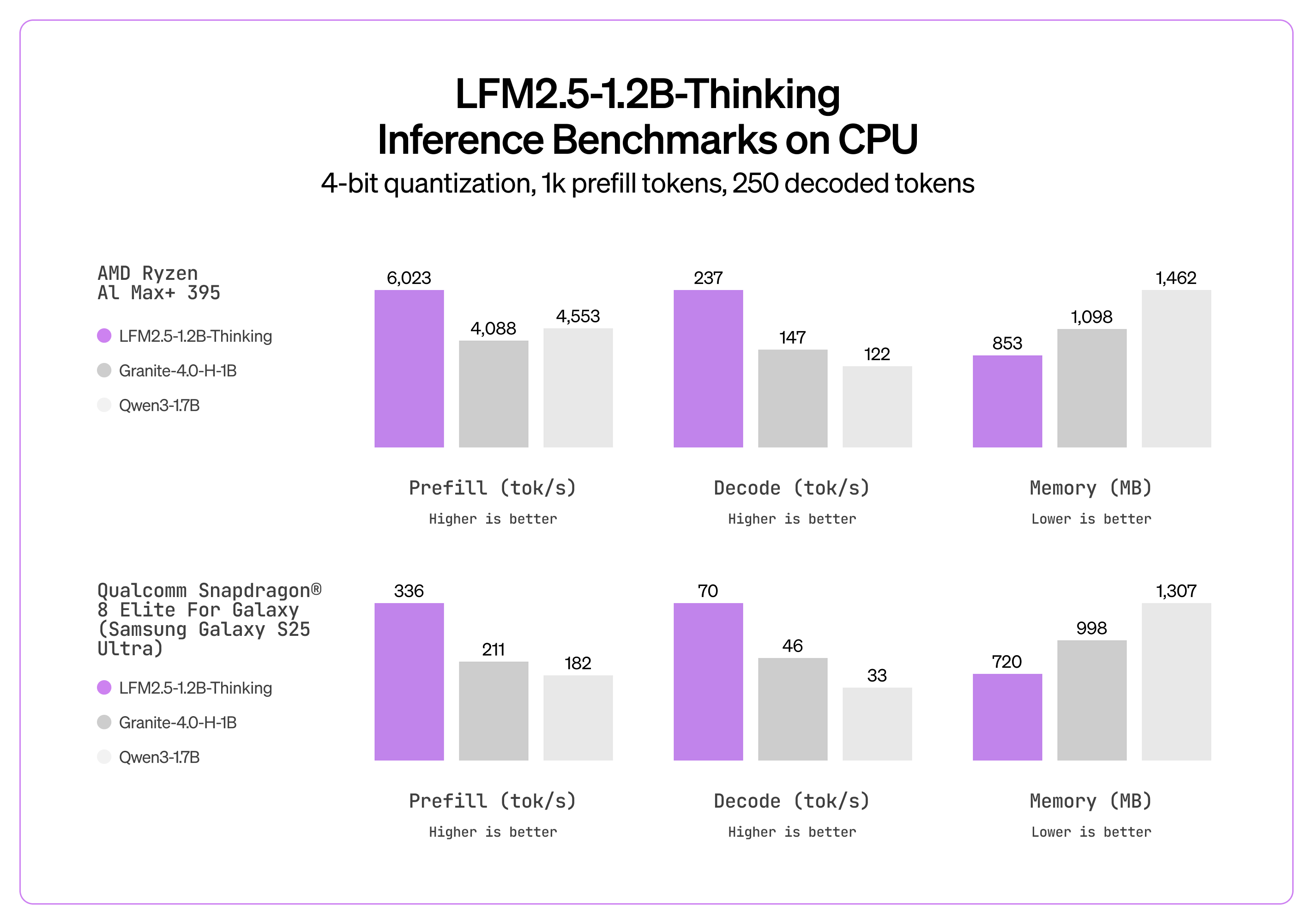

The performance gap widens even more at inference time, where LFM2.5-1.2B-Thinking outpaces not only Qwen3-1.7B but even hybrid architectures like Granite-4.0-H-1B in both speed and memory efficiency.

We found that LFM2.5-1.2B-Thinking shines on agentic and reasoning-heavy tasks (e.g., tool use, math, programming). When the model needs to plan a sequence of tool calls, verify intermediate results, and adjust its approach, the reasoning traces provide real value. However, we recommend using LFM2.5-1.2B-Instruct for chat capabilities and creative writing.

Training Recipe

Building capable small thinking models requires compensating for limited knowledge capacity through multi-step reasoning, while keeping answers concise for low-latency edge deployment.

Prior experiments with LFM-1B-Math showed that including reasoning traces during midtraining helps models internalize the "reason first, then answer" pattern. Supervised fine-tuning (SFT) on synthetic reasoning traces further enables reliable chain-of-thought generation, eliminating the need for a specific format reward.

However, SFT doesn't fix a common issue with reasoning models: getting stuck in repetitive text patterns instead of reaching a conclusion. This behavior is commonly referred to as “doom looping.” We mitigated it with a straightforward approach:

- During preference alignment, we generated five temperature-sampled candidates and one greedy candidate from the SFT checkpoint. The chosen response was the candidate scored highest by an LLM judge. The rejected response was the lowest-scoring candidate when no candidate looped, or a looping candidate whenever a loop occurred (regardless of judge score).

- During RLVR, we further discouraged looping early in training by applying an n-gram-based repetition penalty.
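The pair-selection rule from the preference-alignment step can be sketched as follows. This is a minimal illustration, not the actual pipeline: the `Candidate` type, the `has_loop` heuristic (word n-gram counting with hypothetical thresholds), and the choice to exclude the chosen response from the rejected pool are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    judge_score: float  # score from an LLM judge (scale is hypothetical)


def has_loop(text: str, n: int = 4, max_repeats: int = 3) -> bool:
    """Heuristic loop detector (an assumption, not the authors' detector):
    flag the text if any word n-gram occurs more than `max_repeats` times."""
    words = text.split()
    counts: dict = {}
    for i in range(len(words) - n + 1):
        gram = tuple(words[i:i + n])
        counts[gram] = counts.get(gram, 0) + 1
        if counts[gram] > max_repeats:
            return True
    return False


def build_preference_pair(candidates: list) -> tuple:
    """Return (chosen, rejected) following the rule described above."""
    chosen = max(candidates, key=lambda c: c.judge_score)
    # Assumption: never reject the chosen response itself.
    others = [c for c in candidates if c is not chosen]
    looping = [c for c in others if has_loop(c.text)]
    if looping:
        # A looping candidate is rejected regardless of its judge score.
        rejected = looping[0]
    else:
        rejected = min(others, key=lambda c: c.judge_score)
    return chosen, rejected


candidates = [
    Candidate("The answer is 4.", 0.9),
    Candidate("Maybe it is 5?", 0.2),
    Candidate("wait " * 20, 0.5),  # degenerate, looping output
]
chosen, rejected = build_preference_pair(candidates)
```

In this example the looping candidate is rejected even though another candidate has a lower judge score, so the preference signal targets the looping behavior directly.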

This approach reduced the percentage of doom loops from 15.74% (midtraining) to 0.36% (RLVR) on a dataset of representative prompts.
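For the n-gram-based repetition penalty used during RLVR, the post does not give the exact formula. One plausible form, shown here purely as an assumption, subtracts the fraction of duplicated token n-grams from the task reward. The function names, the n-gram size `n`, and the `weight` parameter are hypothetical.

```python
from collections import Counter


def ngram_repetition_fraction(tokens: list, n: int = 4) -> float:
    """Fraction of n-grams in `tokens` that duplicate an earlier n-gram."""
    total = len(tokens) - n + 1
    if total <= 0:
        return 0.0
    counts = Counter(tuple(tokens[i:i + n]) for i in range(total))
    repeated = sum(c - 1 for c in counts.values())
    return repeated / total


def shaped_reward(task_reward: float, tokens: list,
                  n: int = 4, weight: float = 1.0) -> float:
    """Task reward minus a repetition penalty (illustrative shaping only)."""
    return task_reward - weight * ngram_repetition_fraction(tokens, n)


clean = [1, 2, 3, 4, 5, 6, 7, 8]   # no repeated 4-grams
looping = [1, 2] * 6               # the same two tokens repeated
clean_reward = shaped_reward(1.0, clean)
looping_reward = shaped_reward(1.0, looping)
```

A correct but repetitive rollout then earns less than a correct and concise one, which pushes the policy away from loops before they become entrenched.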

Our RL pipeline is built on an internal fork of verl [1] and focuses on critic-free, group-relative policy-gradient optimization (GRPO-style). The base implementation is reference-free, and we incorporate techniques such as asymmetric ratio clipping, dynamic filtering of zero-variance prompt groups, overlong-sample masking, no advantage normalization, and truncated importance sampling (e.g., [2-6]). Individual configurations are tuned per target domain: verifiable tasks use rule-based rewards, while open-ended prompts rely on generative reward models. We also explored cross-tokenizer on-policy distillation as either a primary or an auxiliary objective, but preliminary results did not show a significant benefit.
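To make the listed techniques concrete, here is a simplified, sequence-level sketch of how they might fit together for one prompt group. It is not the verl implementation, which operates on token-level tensors. All function names, the dictionary keys, and the constants (`eps_low`, `eps_high`, `cap`) are illustrative assumptions.

```python
import math
from statistics import mean
from typing import Optional


def group_advantages(rewards: list) -> Optional[list]:
    """Mean-centered advantages with no std normalization.
    Returns None for zero-variance groups so the caller can drop them."""
    if max(rewards) == min(rewards):
        return None  # dynamic filtering: the group carries no learning signal
    baseline = mean(rewards)
    return [r - baseline for r in rewards]


def clipped_objective(logp_new: float, logp_old: float, advantage: float,
                      eps_low: float = 0.2, eps_high: float = 0.28) -> float:
    """PPO-style surrogate with asymmetric clipping (eps_high > eps_low)."""
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)


def tis_weight(logp_old: float, logp_rollout: float, cap: float = 2.0) -> float:
    """Truncated importance weight correcting trainer/rollout-engine mismatch."""
    return min(math.exp(logp_old - logp_rollout), cap)


def group_loss(samples: list) -> Optional[float]:
    """samples: dicts with keys reward, logp_new, logp_old, logp_rollout, truncated."""
    advantages = group_advantages([s["reward"] for s in samples])
    if advantages is None:
        return None
    terms = []
    for sample, adv in zip(samples, advantages):
        if sample["truncated"]:
            continue  # overlong-sample masking: skip length-truncated rollouts
        weight = tis_weight(sample["logp_old"], sample["logp_rollout"])
        terms.append(weight * clipped_objective(
            sample["logp_new"], sample["logp_old"], adv))
    return -mean(terms) if terms else 0.0


group = [
    {"reward": 1.0, "logp_new": -1.0, "logp_old": -1.0,
     "logp_rollout": -1.0, "truncated": False},
    {"reward": 0.0, "logp_new": -1.0, "logp_old": -1.0,
     "logp_rollout": -1.0, "truncated": True},
]
loss = group_loss(group)
```

There is no critic and no KL term against a reference model: the baseline is the group mean, which matches the critic-free, reference-free setup described above.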

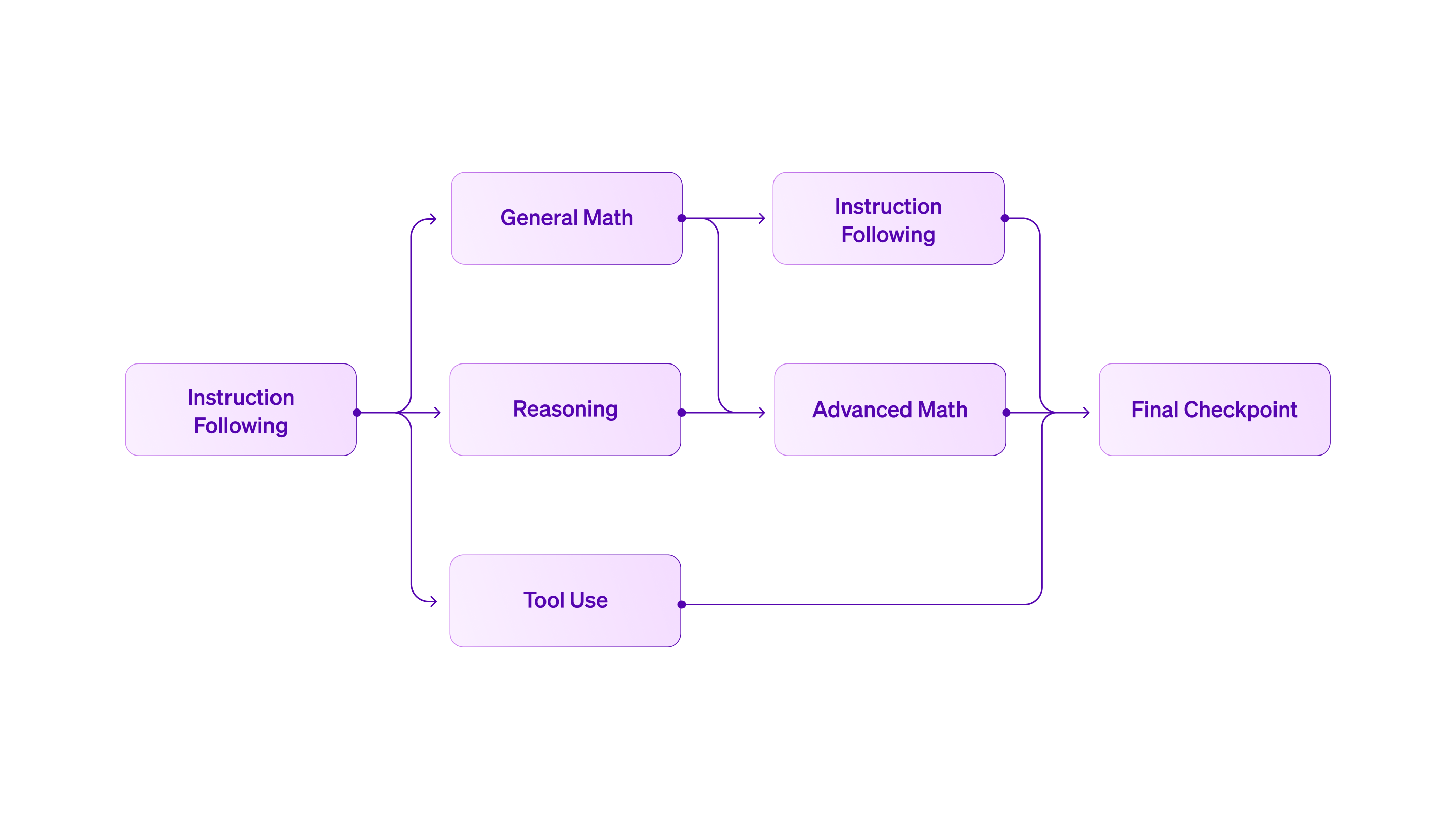

We adopted a curriculum RL approach with a highly parallel structure. Rather than training a single model on multiple domains simultaneously, we start with instruction-following RLVR as our foundation, then branch out to create domain-specific checkpoints for reasoning, math, and tool use.

This parallel approach contrasts with the traditional method of training a single model on all domains at once, which often leads to capability interference and makes it difficult to diagnose regressions. Our curriculum allows for finer control: each domain-specific model can be optimized independently with its own reward shaping, hyperparameters, and evaluation criteria. We then apply iterative model merging at different steps to create new checkpoints with a balanced mixture of target capabilities. For example, after tool-use-focused training, we merge with an earlier math-optimized checkpoint to recover any degraded math reasoning performance. This flexibility lets us navigate capability trade-offs more effectively than pure sequential training.

The approach is also more efficient for a small, focused team. Independent workstreams can iterate quickly on their respective domains without blocking each other. Contributions are easily combined through merging rather than coordinated joint training runs. We found that model merging preserves overall performance while integrating specialized improvements, making it a practical path to scale general-purpose RLVR.
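The post does not specify which merging method is used. As a minimal sketch, assuming plain linear interpolation of weights between two checkpoints with identical architectures, a merge step could look like this. The `merge_checkpoints` helper, the `alpha` coefficient, and the toy checkpoints are hypothetical.

```python
def merge_checkpoints(state_a: dict, state_b: dict, alpha: float = 0.5) -> dict:
    """Linear interpolation: (1 - alpha) * state_a + alpha * state_b.

    Each state maps a parameter name to a flat list of floats. Real
    checkpoints hold tensors, but the arithmetic is the same.
    """
    if state_a.keys() != state_b.keys():
        raise ValueError("checkpoints must share the same parameter names")
    merged = {}
    for name, weights_a in state_a.items():
        weights_b = state_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [(1.0 - alpha) * a + alpha * b
                        for a, b in zip(weights_a, weights_b)]
    return merged


# Toy example: pull a tool-use checkpoint 25% of the way toward a math checkpoint.
tool_use_ckpt = {"layer.weight": [1.0, 2.0]}
math_ckpt = {"layer.weight": [3.0, 6.0]}
merged_ckpt = merge_checkpoints(tool_use_ckpt, math_ckpt, alpha=0.25)
```

Under this assumption, `alpha` becomes the knob for trading one capability against another, and it can be swept against each domain's evaluation suite without any further training.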

Expanding the LFM Ecosystem

You can fine-tune LFM2.5 today with TRL and Unsloth. LFM2.5-1.2B-Thinking is also launching with day-zero support across the most popular inference frameworks, including llama.cpp, MLX, vLLM, and ONNX Runtime. All frameworks support both CPU and GPU acceleration across Apple, AMD, Qualcomm, and Nvidia hardware.

You can find the entire family of LFM2.5 models here:

To ensure the LFM2.5 family runs efficiently wherever you need it, we are rapidly expanding our hardware and software ecosystem by welcoming Qualcomm Technologies, Inc., Ollama, FastFlowLM, and Cactus Compute as new launch partners.

“Qualcomm Technologies, Inc. is proud to be a launch partner for Liquid AI’s open‑weight LFM2.5‑1.2B‑Thinking. With Nexa AI’s optimizations for our NPUs, developers can bring smarter, faster on‑device AI to Snapdragon‑powered devices, combining performance with privacy and reliability at the edge.”— Vinesh Sukumar, VP, Product Management, Qualcomm Technologies, Inc.

This expansion significantly broadens our hardware and software support: our existing partner, Nexa AI, delivers optimized performance for Qualcomm Technologies, Inc. NPUs, while FastFlowLM joins us to provide a specialized, high-performance runtime for AMD Ryzen™ NPU devices. Additionally, Ollama and Cactus Compute join as launch partners to enable seamless local and edge deployment workflows.

The table below demonstrates the inference acceleration achieved through these optimized implementations.

Inference Speed Benchmarks for LFM2.5-1.2B-Thinking

LFM2.5-1.2B-Thinking excels at long-context inference. For example, on AMD Ryzen™ NPUs with FastFlowLM, decoding throughput sustains ~52 tok/s at 16K context and ~46 tok/s even at the full 32K context, indicating robust long-context scalability. For more details on longer-context benchmarks on AMD Ryzen™ NPUs with FastFlowLM, see here.

Get Started

We’re proud to announce that, since its release, the LFM2 family has surpassed 10 million downloads on Hugging Face.


With LFM2.5, we're delivering on our vision of AI that runs anywhere. These models are:

- Open-weight: Download, fine-tune, and deploy without restrictions

- Fast from day one: Native support for llama.cpp, NexaSDK, Cactus Engine, LM Studio, Ollama, FastFlowLM, MLX, and vLLM across Apple, AMD, Qualcomm Technologies, Inc., and Nvidia hardware

- A complete family: From base models for customization to specialized audio and vision variants, one architecture covers diverse use cases

The edge AI future is here. We can't wait to see what you build.

References

[1] Sheng, Guangming, et al. "HybridFlow: A Flexible and Efficient RLHF Framework." arXiv, 2024.

[2] Ahmadian, Arash, et al. "Back to Basics: Revisiting REINFORCE Style Optimization for Learning from Human Feedback in LLMs." arXiv, 2024.

[3] Shao, Zhihong, et al. "DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models." arXiv, 2024.

[4] Yu, Qiying, et al. "DAPO: An Open-Source LLM Reinforcement Learning System at Scale." arXiv, 2025.

[5] Liu, Zichen, et al. "Understanding R1-Zero-Like Training: A Critical Perspective." arXiv, 2025.

[6] Yao, Feng, et al. "Your Efficient RL Framework Secretly Brings You Off-Policy RL Training." Feng Yao's Notion, 2025.

Citation

Please cite this article as:

Liquid AI, "LFM2.5-1.2B-Thinking: On-Device Reasoning Under 1GB", Liquid AI Blog, Jan 2026.

Or use the BibTeX citation:

@article{liquidAI2026thinking,
  author = {Liquid AI},
  title = {LFM2.5-1.2B-Thinking: On-Device Reasoning Under 1GB},
  journal = {Liquid AI Blog},
  year = {2026},
  note = {www.liquid.ai/blog/lfm2-5-1-2b-thinking-on-device-reasoning-under-1gb},
}

For enterprise deployments and custom solutions, contact our sales team. Read our technical report for implementation details.

GPQA, MMLU-Pro, IFBench, and AIME25 follow ArtificialAnalysis's methodology. For IFEval and Multi-IF, we report the average score across strict and loose prompt and instruction accuracies. For BFCLv3, we report the final weighted average score with a custom Liquid handler to support our tool use template.
