Why SLMs Are the Key to Scaling Agentic Workflows

For the last few years, the AI narrative has been dominated by a 'bigger is better' philosophy. We’ve watched parameter counts balloon into the hundreds of billions or even trillions, driven by the assumption that raw scale is the only path to intelligence. While scale delivers capability, it also creates a deployment bottleneck. A shift is now underway because massive models are too heavy to use for every task. The future of AI, particularly in agentic workflows, is not about choosing one size; it’s about a hybrid approach.

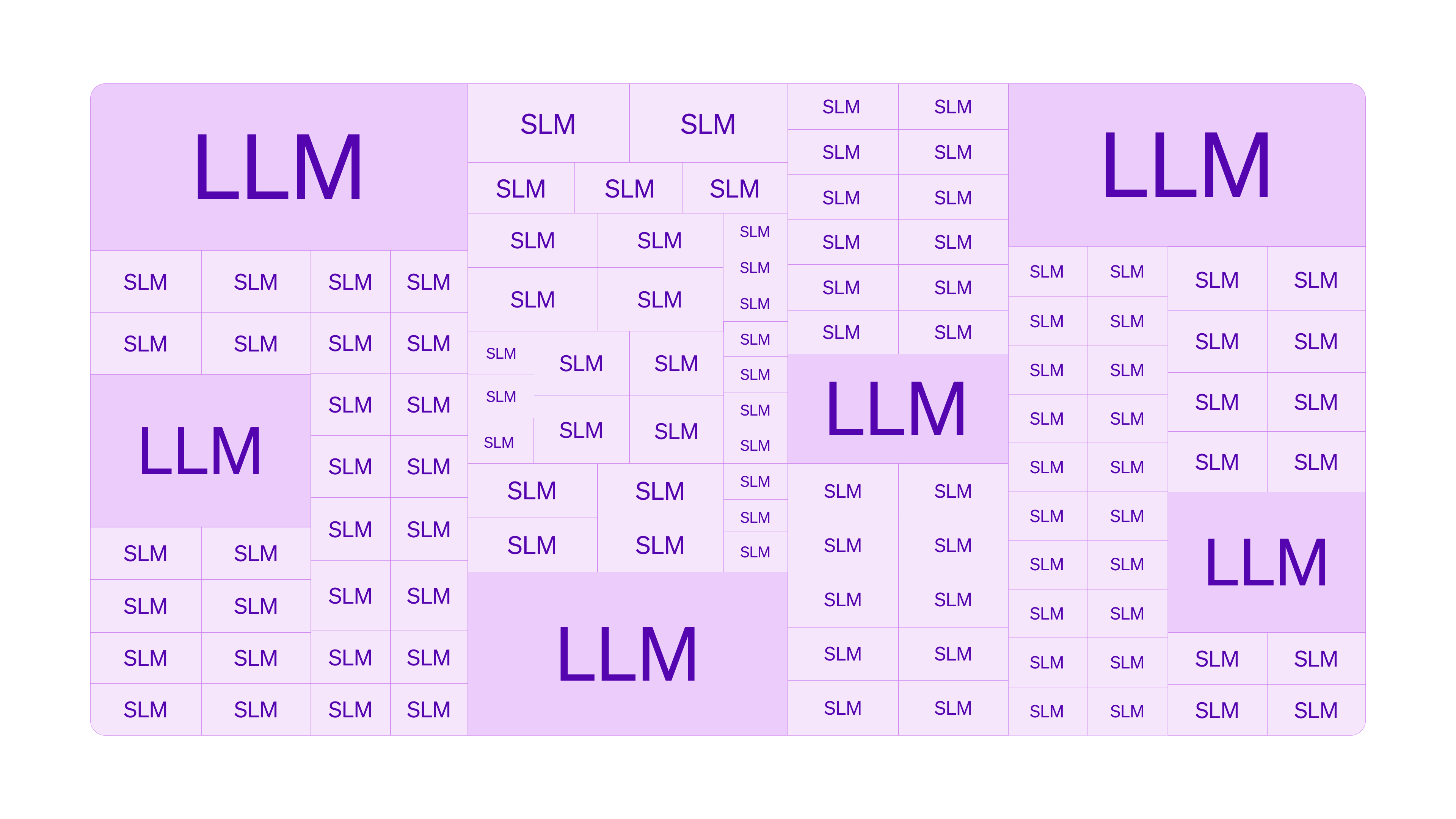

We are moving away from using a massive supercomputer for every single interaction and toward an ecosystem where Small Language Models (SLMs) like Liquid’s LFM2.5 tackle the bulk of execution, while Large Language Models (LLMs) handle tasks that require complex reasoning.

The "Corporate Structure" Problem

To understand why this shift is necessary, imagine a Fortune 500 logistics company. You wouldn't hire 1,000 executives to sort packages in the warehouse.

Sure, an SVP is capable of packing a box. But they are not built for speed. While the executive is still processing the 'big picture' of the supply chain, the warehouse specialist has already packed ten boxes. In AI terms, using a massive model for a simple task adds unnecessary latency; it can accomplish the job, but it lags behind a nimble specialist.

Current AI adoption looks like a company full of executives.

We are currently using massive, reasoning-heavy LLMs (the "Executive") to perform simple tasks like extracting information from documents or routing customer tickets. It’s inefficient, slow, and costly.

The Hybrid Future of Agentic AI:

- The LLM (The Executive): Handles high-level reasoning, complex planning, ambiguity, and strategy. You only need a few.

- The SLM (The Specialist): Handles focused tasks like document retrieval, function calling, or summarization. You deploy thousands. They're fast and cheap.

The Flexibility Factor: Why SLMs are Like "Kids"

Beyond the corporate structure, there is a developmental advantage to going small.

Think of a massive LLM as a tenured university professor. They possess extensive knowledge of the world, history, or physics. However, if you attempt to change their fundamental behavior or teach them a completely new, hyper-specific internal company lingo, they are rigid. "Retraining" them takes months and is expensive.

SLMs are like brilliant children: malleable, but they need more practice. Like a student who needs repetition to master a new skill, SLMs often need more examples than a massive model before their behavior is truly steered. But because they are so lightweight, this repetition is cheap. You can feed thousands of examples to an open-source SLM like LFM2.5-1.2B-Instruct and iterate on the results in hours, which lets you train it on your specific internal compliance documents in a single afternoon.

SLMs adapt quickly to the tasks you specialize them for. If your business logic changes, you don't need to rebuild the brain; you just update the specialist. This makes them perfect for Agentic AI, where agents need to follow strict, changing protocols without hallucinating creative answers.

Why Has Adoption Been Slow? (And Why That’s Changing)

If specialized models are so effective, why isn't everyone using them yet?

The reality is that enterprise AI is hitting a wall. One of the underlying reasons is what Deloitte’s 2026 Tech Trends report calls an “AI infrastructure reckoning”. Why? The infrastructure simply wasn’t designed for AI at enterprise scale. Companies are trying to shoehorn massive, cloud-native models into real-world workflows, only to discover that API-based models that work well in a lab become cost-prohibitive, latency-bound and operationally fragile in production.

This is especially true for agentic workflows, where continuous inference loops drive token costs upward while cloud latency undermines real-time responsiveness. As Deloitte notes, these pressures are forcing organizations to rethink where inference runs, across cloud, on-prem, and edge, instead of assuming one-size-fits-all infrastructure.

In addition to the above, we are finally seeing the “middle management” layer of AI mature. While early routing projects (like RouteLLM) paved the way, we often lacked the enterprise-grade infrastructure to make them reliable in production. We now have mature, intelligent orchestration systems that can reliably look at a user query and say, “This is easy, send it to the SLM”, or “This is hard, wake up the LLM”, without the fragility of earlier experimental tools.

The Liquid Difference: Efficiency by Design, Not Compression

This is where the industry is pivoting, led by new architectures like Liquid Foundation Models (LFMs). Historically, the industry approached small models by taking architectures designed for massive LLMs and compressing them to fit a smaller footprint. Liquid AI takes a fundamentally different approach.

Instead of shrinking a giant brain, we engineer a more efficient one from the ground up. Our models are architected to be lean, fast, and hardware-aware by default (trained via hardware-in-the-loop). With significantly faster training cycles, you can specialize a Liquid model on your company’s specific data in days, not quarters.

Beyond the cloud, LFMs can be deployed directly at the edge: on laptops, smartphones, robots, IoT devices, and many more. This enables a powerful new agentic workflow where a router orchestrates between ultra-fast on-device SLMs for low-latency action and large cloud models for complex reasoning.

The Hybrid Agent in Action: Liquid Foundation Models

Let’s visualize how this looks in a real-world scenario.

Imagine a next-generation financial assistant integrated into your e-banking app. It is a voice-powered assistant running on your phone. You can ask all sorts of financial-related questions, ranging from very easy ones, like “How much did I spend this month on groceries?” to more complex and nuanced ones like “I have $50,000 saved, should I use it for a down payment on a house or keep renting and invest it?”

A monolithic solution based on a single frontier language model equipped with all possible tools looks appealing.


However, it has 3 real problems that just get worse as you grow:

- Ballooning costs. A long list of tools means a higher token cost per request. Before the model has decoded a single token of the response, your context window can already be half full, which also hurts output quality.

- Latency increases over time. More tool descriptions to process means slower initial responses. If your team adds more tools to handle hard questions better, latency gets worse for the 80% of queries that are easy.

- Reliability suffers as you expand coverage. More tools mean more flexibility, but this also means more failure modes. Again, someone on your team working to improve the system for “hard” questions will likely cause a drop in performance for easier queries.

In other words, the monolith is hard to audit, hard to iterate on without breaking things, and has a very steep cost curve.
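The cost argument above can be made concrete with some back-of-the-envelope arithmetic. This is a rough sketch only: the per-tool token count, the query length, and the tool counts are all hypothetical figures chosen for illustration, not measurements from any real system.

```python
def prompt_tokens(num_tools: int, tokens_per_tool: int = 150, query_tokens: int = 30) -> int:
    """Estimate prompt size when every tool description is sent with every request.

    All defaults are illustrative assumptions, not measured values.
    """
    return num_tools * tokens_per_tool + query_tokens


# Hypothetical monolith: one model that sees all 40 tools on every call.
monolith = prompt_tokens(num_tools=40)

# Hypothetical specialist: a model that only sees the 4 tools for its category.
specialist = prompt_tokens(num_tools=4)

print(f"monolith prompt:   {monolith} tokens")
print(f"specialist prompt: {specialist} tokens")
print(f"overhead ratio:    {monolith / specialist:.1f}x")
```

Under these assumed numbers, every easy query pays for tool descriptions it never uses, and the gap widens each time a tool is added to the monolith.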

What’s the alternative?

As with any successful ML/AI project, it all starts by analyzing the data you have. Log 2-4 weeks of real user queries and identify the roughly 80% that are easy and fall into clear categories:

- Credit card spending/transactions

- Checking account balance/history

- Savings account queries

- Bill payments

- Recent transactions

- Etc.

From here, you can start iteratively building a modular system composed of:

- A lightweight orchestrator that assigns incoming questions to categories. A fine-tuned SLM works like a charm.

- A fleet of specialist SLMs, each of them able to answer inquiries from a given category using only a subset of tools. This means you get fast, high-quality responses without linearly increasing token usage costs.

- A fallback frontier LM to handle the most complex queries that no SLM can tackle.
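The three components above can be sketched in a few lines of Python. This is a minimal illustration, not an actual implementation: the category names and keyword rules are hypothetical, the `classify` function is a keyword-matching stand-in for the fine-tuned SLM orchestrator, and the handlers are stubs where real model calls would go.

```python
from typing import Callable, Dict, Tuple

# Hypothetical categories and keywords, loosely based on the query list above.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "credit_card": ("credit card", "spend", "spent"),
    "checking": ("checking", "balance"),
    "savings": ("savings account",),
    "bills": ("bill", "pay my"),
}


def classify(query: str) -> str:
    """Stand-in for the orchestrator SLM: return a category or 'fallback'."""
    text = query.lower()
    for category, keywords in KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return category
    return "fallback"


def make_specialist(name: str) -> Callable[[str], str]:
    """Build a stub specialist; a real one would call an SLM with a small tool subset."""
    def handler(query: str) -> str:
        return f"[{name} SLM] {query}"
    return handler


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    name: make_specialist(name) for name in KEYWORDS
}


def frontier_llm(query: str) -> str:
    """Stub for the fallback frontier model that handles everything else."""
    return f"[frontier LLM] {query}"


def route(query: str) -> str:
    """Send the query to its specialist, or to the frontier model if none matches."""
    handler = SPECIALISTS.get(classify(query), frontier_llm)
    return handler(query)


print(route("How much did I spend this month on groceries?"))
print(route("I have $50,000 saved, should I use it for a down payment "
            "on a house or keep renting and invest it?"))
```

Because each specialist is registered independently in `SPECIALISTS`, adding or retraining one does not touch the others, which is the property the modular design relies on.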


The modular system allows different team members to improve and add specialists without degrading performance or impacting latency for others.


The result is a more accurate, cheaper, and faster solution than the monolith. Bingo.

The Bottom Line

The future of AI isn't a single god-like model doing all the work. It is a swarm of both small and large language models, orchestrated to maximize output quality and minimize cost and latency.

For businesses, the race is no longer about who has the biggest model. It's about who can build the most efficient team of models.

The Future Is Hybrid and Specialized

The tools to execute this hybrid future are ready now. Build with Liquid's LFMs, task-specific models that can deliver cloud-quality intelligence at a fraction of the cost and energy.

Start building today:

- Explore: Discover the LFM Model Family.

- Experience: Test the latency yourself in the Liquid Playground.

- Build: Dive into the Docs and learn how to fine-tune in hours.

- Scale: Get in touch with Liquid AI to transform your workflow.
