Building AI applications today means dealing with cloud inference—and all the latency, cost, and privacy challenges that come with it. What if you could run powerful AI models directly on your users' laptops with sub-100ms response times?

Today, we're excited to share how we're bringing LEAP—our edge AI platform—to everyday PCs and laptops, starting with our LFM models running on AMD Ryzen processors.

The Hardware Fragmentation Challenge

Edge AI deployment faces significant complexity: x86 and ARM architectures, multiple GPU vendors, and three major operating systems (Windows, macOS, Linux) each with distinct requirements. Building native support for every combination would require enormous engineering resources and time.

We're taking a community-first approach. Rather than building everything from scratch, we're partnering with established tools that developers already use in production.

llama.cpp as Our Foundation

Our first integration target is llama.cpp—the de facto standard for efficient inference on consumer hardware. Its mature ecosystem and active community have already solved many critical optimization challenges.

By supporting llama.cpp as our inference engine, LEAP integrates seamlessly into existing workflows:

- Python developers can use our bindings alongside their current toolchain

- Node.js developers get native performance without C++ complexity

- The entire llama.cpp ecosystem of tools and optimizations works immediately
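To give a feel for what local inference through llama.cpp looks like from Python, here is a minimal sketch. It assumes the community `llama-cpp-python` package rather than the LEAP bindings themselves, whose API is not shown in this post. The `build_messages` and `generate` helpers, the system prompt, and the model path are illustrative names, not part of any SDK.

```python
from typing import Dict, List


def build_messages(
    user_prompt: str,
    system_prompt: str = "You are a concise assistant.",
) -> List[Dict[str, str]]:
    """Build a chat-style message list (hypothetical helper for this sketch)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(model_path: str, user_prompt: str, max_tokens: int = 128) -> str:
    """Run one chat completion locally against a GGUF model file."""
    # Imported lazily so build_messages works without the package installed.
    # Assumption: llama-cpp-python is installed (pip install llama-cpp-python).
    from llama_cpp import Llama

    llm = Llama(
        model_path=model_path,
        n_ctx=2048,       # context window in tokens
        n_gpu_layers=-1,  # offload all layers if a GPU backend was compiled in
        verbose=False,
    )
    result = llm.create_chat_completion(
        messages=build_messages(user_prompt),
        max_tokens=max_tokens,
    )
    return result["choices"][0]["message"]["content"]
```

Calling `generate("LFM2-1.2B-Q4_0.gguf", "Summarize edge AI in one sentence.")` with a locally downloaded GGUF file would run the whole request on-device. The path here is a placeholder.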

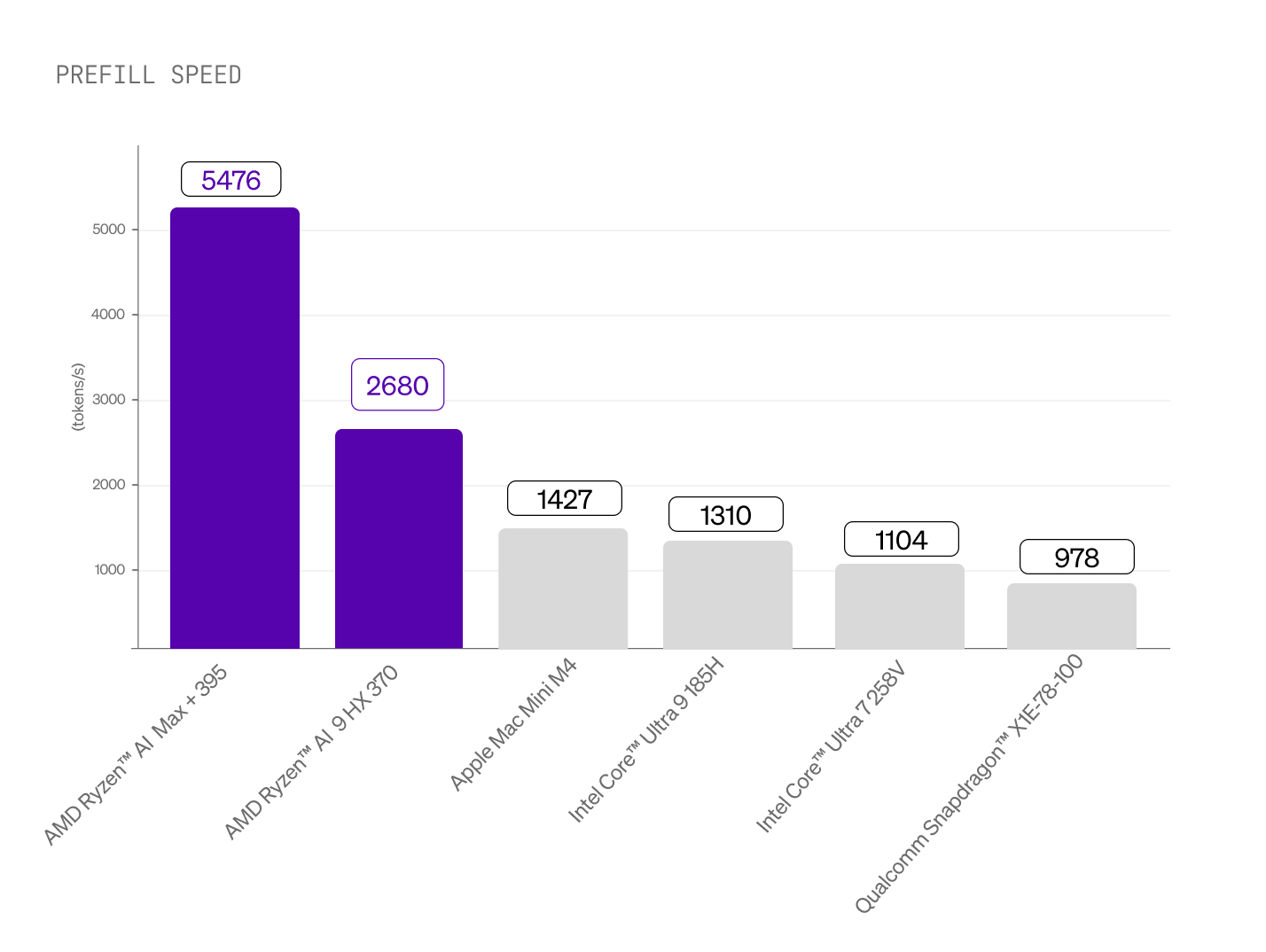

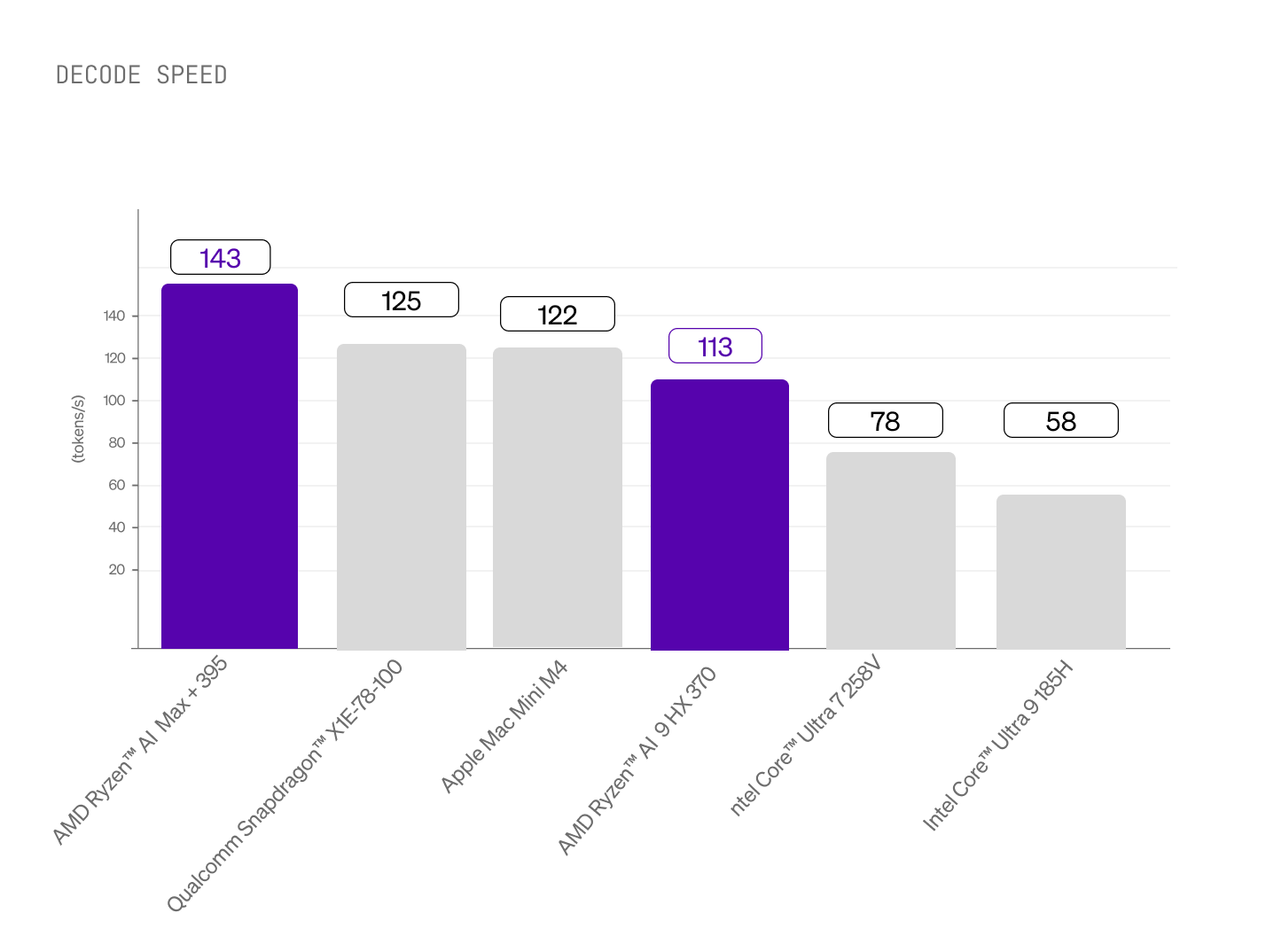

After extensive benchmarking across consumer hardware, we found that AMD Ryzen AI processors deliver the best performance for LEAP deployments. Figure 1 compares throughput, measured in tokens per second (higher is better), for both prefill (prompt processing) and decode (token generation) on LFM2-1.2B-Q4_0.gguf. For a fair comparison, we use the publicly released `llama-bench` executables from the main llama.cpp GitHub repository with all available pre-built backends, including all available GPU backends. For the CPU backend, we vary the number of threads (4, 8, and 12). We report the maximum values observed on each hardware platform.
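The thread sweep described above could be scripted roughly as follows. This is a sketch, not the harness used for Figure 1. It assumes `llama-bench` is on the `PATH`, that its JSON output reports `n_prompt`, `n_gen`, and `avg_ts` per test, and that prompt-only rows have `n_gen == 0` while generation-only rows have `n_prompt == 0`. The function names and the prompt and generation lengths are illustrative choices.

```python
import json
import subprocess
from typing import Dict, Iterable, List


def build_command(
    model_path: str,
    threads: Iterable[int],
    n_prompt: int = 512,
    n_gen: int = 128,
) -> List[str]:
    """Assemble a llama-bench invocation that sweeps CPU thread counts."""
    return [
        "llama-bench",
        "-m", model_path,
        "-p", str(n_prompt),  # prefill (prompt-processing) test length
        "-n", str(n_gen),     # decode (token-generation) test length
        "-t", ",".join(str(t) for t in threads),
        "-o", "json",
    ]


def best_throughput(records: List[Dict]) -> Dict[str, float]:
    """Return the maximum tokens/s observed for prefill and for decode."""
    best = {"prefill": 0.0, "decode": 0.0}
    for rec in records:
        # Assumed convention: each row tests exactly one phase.
        if rec.get("n_gen", 0) == 0:
            phase = "prefill"
        elif rec.get("n_prompt", 0) == 0:
            phase = "decode"
        else:
            continue  # skip combined prompt+generation rows
        best[phase] = max(best[phase], float(rec["avg_ts"]))
    return best


def run_sweep(model_path: str, threads: Iterable[int] = (4, 8, 12)) -> Dict[str, float]:
    """Run llama-bench once and keep the best result per phase."""
    completed = subprocess.run(
        build_command(model_path, threads),
        capture_output=True,
        text=True,
        check=True,
    )
    return best_throughput(json.loads(completed.stdout))
```

Keeping the parsing in `best_throughput` separate from the subprocess call makes it easy to rerun the "maximum observed" reduction on saved benchmark output from several machines.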

Integrated Radeon Graphics for Prompt Processing

The integrated GPU handles prompt processing at speeds comparable to discrete GPUs—without additional cost, power draw, or integration complexity. This enables genuinely real-time application experiences.

Superior CPU Decode Performance

Token generation leverages AMD's Zen 5 architecture with advanced vector instruction sets, delivering some of the fastest decode/generation speeds measured on consumer hardware. This combination provides GPU acceleration for prompt processing and optimized CPU performance for streaming generation—all in a single chip that's already deployed in millions of devices.
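Because prefill and decode run at very different speeds, it helps to budget them separately when estimating end-to-end response time. The sketch below is simple arithmetic, not a measurement: the function name is hypothetical, and the throughput figures in the usage note are placeholders you would replace with your own `llama-bench` results.

```python
from typing import Dict


def estimate_latency_ms(
    prompt_tokens: int,
    new_tokens: int,
    prefill_tps: float,
    decode_tps: float,
) -> Dict[str, float]:
    """Estimate response time from token counts and tokens-per-second rates."""
    if prefill_tps <= 0 or decode_tps <= 0:
        raise ValueError("throughput values must be positive")
    prefill_ms = 1000.0 * prompt_tokens / prefill_tps  # time to process the prompt
    decode_ms = 1000.0 * new_tokens / decode_tps       # time to stream the output
    return {
        "prefill_ms": prefill_ms,
        "decode_ms": decode_ms,
        "total_ms": prefill_ms + decode_ms,
    }
```

For example, with placeholder rates of 2000 tokens/s for prefill and 100 tokens/s for decode, a 200-token prompt followed by 50 generated tokens works out to 100 ms of prefill plus 500 ms of decode. Streaming the output means the user starts seeing tokens shortly after the prefill portion completes.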

Building with LEAP

With LEAP running locally via llama.cpp on AMD hardware, developers can now build:

- Privacy-first applications where sensitive data remains on-device

- Real-time AI features with consistent sub-100ms latency

- 100% local software that functions without internet connectivity

- Cost-effective solutions eliminating cloud inference expenses

The same code runs wherever llama.cpp is supported, ensuring no vendor lock-in.

Getting Started

We've created comprehensive documentation on our LEAP platform covering:

- Installing LEAP with llama.cpp backend

- Loading and running LFM2 models

- Integration with Python and Node.js applications

- Performance optimization for AMD Ryzen hardware

The SDK is available today with benchmarks and example implementations.

What's Next

This AMD integration represents the first step in making powerful AI ubiquitous on edge devices. Our immediate roadmap includes:

- LFM2-VL support: Bringing our vision-language models to edge devices, enabling multimodal applications that process both text and images locally

- Further memory and power optimizations, leveraging GPUs and NPUs

- Integration with additional inference engines beyond llama.cpp

The future of AI extends beyond data centers to the devices your users already own. We're building the infrastructure to make that future accessible to every developer.

Availability

The LEAP SDK with AMD acceleration is available today, supporting the LFM2 model family. Developers can explore tools, benchmarks, and documentation at leap.liquid.ai.

How to Get Started

If you are interested in custom solutions with edge deployment, please contact our sales team at sales@liquid.ai.
